
The Cost of 'Smart Home AI Assistants': Humans Review Audio of What People Say

(euroinfosec) • July 12, 2019

Google Home speaker (Photo: Google)

George Orwell's "1984" posited a world in which Big Brother monitored us constantly, in part via our "telescreens," which could not only broadcast but also record. Increasingly, however, it turns out that we're installing surveillance tools ourselves and thus inadvertently exposing not just our hands-free search requests, but also professional secrets and more intimate moments.


On Thursday, Google confirmed that its language reviewers regularly compare computer-generated transcripts of what its devices hear with the actual audio recordings in order to refine its language-recognition systems.

But along the way, its reviewers also hear many things that Google Home smart speakers and the Google Assistant smartphone app record by accident, as Belgian media outlet VRT NWS first reported on Wednesday.

The report was based in part on an interview with a Google contractor who shared Dutch-language audio recordings that the contractor had been given to review. Some of those recordings had not been triggered by users saying the hot word - "OK Google" or "Hey Google" - or intentionally pressing a button on their speaker or smartphone.

As VRT NWS reports: "This means that a lot of conversations are recorded unintentionally: bedroom conversations, conversations between parents and their children, but also blazing rows and professional phone calls containing lots of private information."

It notes that Google relies on thousands of employees to review audio excerpts, including about a dozen Dutch and Flemish language experts.

This isn't the first time such problems have been identified. In April, Bloomberg reported that language-analysis experts regularly reviewed a subset of commands given to Amazon's Alexa via its Echo devices.

Behind the Curtain

Responding to the VRT NWS report, Google says that building technology that can work well with the world's many different languages, accents and dialects is challenging, and notes that it devotes significant resources to refining this capability.

"This enables products like the Google Assistant to understand your request, whether you're speaking English or Hindi," David Monsees, Google's product manager for search, says in a Thursday blog post.

Google says it reviews about 0.2 percent of all audio snippets that it captures. The company declined to quantify how many audio snippets that represents on an annualized basis.

"As part of our work to develop speech technology for more languages, we partner with language experts around the world who understand the nuances and accents of a specific language," Monsees says. "These language experts review and transcribe a small set of queries to help us better understand those languages. This is a critical part of the process of building speech technology, and is necessary to creating products like the Google Assistant."

Monsees also suggests that inadvertent recordings are rare. "A clear indicator (such as the flashing dots on top of a Google Home or an on-screen indicator on your Android device) will activate any time the device is communicating with Google in order to fulfill your request," he says. "Rarely, devices that have the Google Assistant built in may experience what we call a 'false accept.' This means that there was some noise or words in the background that our software interpreted to be the hot word (like 'OK Google')."

But the VRT NWS report suggests that these visual indicators often go unnoticed, and that the threshold for triggering a hot word may be too low. VRT NWS said that of the 1,000 excerpts it reviewed, 153 "were conversations that should never have been recorded and during which the command 'OK Google' was clearly not given."

The Self-Surveillance Age

The rise of self-surveillance is well documented (see: Fitness Dystopia in the Age of Self-Surveillance).

Today, our cellular phones ping telecommunications infrastructure on a constant basis, revealing our location to telcos and to anyone else with whom they share, or to whom they sell, the data.

We upload photographs to Facebook and Instagram that reveal our location and interactions with friends. Social networks are increasingly using facial-recognition algorithms and machine learning to identify those friends by name, while also tracking the establishments we visit in order to serve more targeted advertising.

And we're never more than one "Hey Siri" or "OK Google" away from an immediate, hands-free answer to a question.

Who Watches the Listeners?

Many technology firms maintain that they merely handle anonymized data and that - barring a court order in the occasional murder case - they do not share the data or recordings constantly captured by their devices with anyone else.

But it turns out that where Google's smart speakers and smartphone app are concerned, there's at least one potential weak link in the security assurance process: the people who review the audio snippets to better refine the performance of these "AI home assistants."

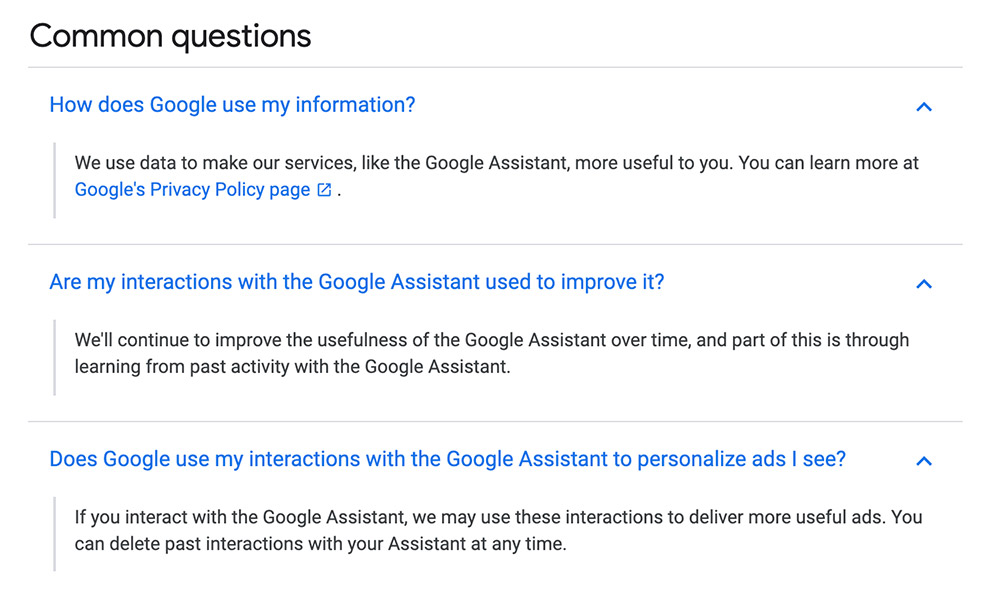

Google's "choose what to share with your Google Assistant" help page

Of course, audio captured - inadvertently or otherwise - by "smart" home devices could reveal details about individuals' actual identities. So if humans are a necessary part of the process, one question Google Assistant users should be asking is: How does Google vet these reviewers?

I put that question to Google, along with queries about how its audio reviewers are meant to proceed if they hear evidence of a crime, such as spouse abuse or child endangerment, and how such policies are enforced.

A spokesman declined to respond to those specific questions, but says a review of its practices is underway. "We partner with language experts around the world to improve speech technology by transcribing a small set of queries - this work is critical to developing technology that powers products like the Google Assistant," he tells me. "Language experts only review around 0.2 percent of all audio snippets, and these snippets are not associated with user accounts as part of the review process. We just learned that one of these reviewers has violated our data security policies by leaking confidential Dutch audio data. Our security and privacy response teams have been activated on this issue, are investigating, and we will take action. We are conducting a full review of our safeguards in this space to prevent misconduct like this from happening again."

Risk Management for AI Home Assistants

For information security experts, there's a further takeaway from this story: You need to add the likes of Google Assistant and Amazon Alexa to your threat model.

U.K.-based Kevin Beaumont, who works as an information security manager, says he uses Alexa precisely because his employees use these types of devices too. "I secure organizations for a living; it's easier if I live the same world as the people I'm working with to secure said things," Beaumont tweets.

Beaumont says becoming better versed in the IoT world is akin to making the jump from Linux to Windows. "For my first job in '99 I was a pure Linux kid; I didn't use Windows," he says. "I quickly realized I had no concept of risk management for business as I didn't know what they were doing."

Welcome to our brave new IoT-enabled, social-media-documented, perpetually connected world.