Fraud News

3rd Party Risk Management , Governance , Privacy

Settlement Stems From Cambridge Analytica Incident (@Ferguson_Writes) • July 12, 2019

(Watch for updates on this developing story.)

After a long privacy investigation, the U.S. Federal Trade Commission voted to levy a $5 billion fine against Facebook, according to the Washington Post and the Wall Street Journal.

The FTC voted 3-2 to approve the settlement, with three Republican members voting in favor and the two Democratic members voting against it, according to the news reports. The U.S. Justice Department must approve any final settlement.

For months, the FTC and Facebook have been negotiating a settlement over whether the social network violated a 2012 agreement with the agency. The FTC investigation was launched as a result of the Cambridge Analytica controversy. The now-defunct voter-profiling firm improperly obtained profile data for 87 million Facebook users without their consent (see: Facebook and Cambridge Analytica: Data Scandal Intensifies).

During its first-quarter earnings call, Facebook noted it would set aside about $3 billion in anticipation of the FTC fine. Executives also noted during that call that the final number could be as high as $5 billion (see: Facebook Takes $3 Billion Hit, Anticipating FTC Fine).

A Facebook spokesperson told Information Security Media Group that the company is not commenting on the reports of a settlement.

Largest FTC Fine

A $5 billion fine would be the largest ever imposed by the FTC; the previous record is a $22.5 million fine imposed on Google in 2012.

The Cambridge Analytica scandal began when a Cambridge University researcher, Aleksandr Kogan, deployed a personality quiz on Facebook in late 2013. The quiz collected information not only on the people who took it, but also on their friends.

Kogan passed the data to Cambridge Analytica, which Facebook contended was against its rules. The sharing of personal information appears to have violated the 2012 agreement between the FTC and Facebook.

Cybercrime , Fraud Management & Cybercrime , Governance

Unsealed Indictment Describes Alleged Insider Theft Scenario (@Ferguson_Writes) • July 12, 2019

Xudong Yao is believed to be living in China (Image: FBI)

A former software engineer for an Illinois-based locomotive manufacturer allegedly stole proprietary information and other intellectual property from the company before fleeing to China, according to an indictment the U.S. Justice Department unsealed Thursday.

Xudong Yao, 57, has been indicted on nine federal counts of theft of trade secrets, according to the U.S. Attorney's Office for the Northern District of Illinois, which is overseeing the case along with the FBI. Yao, who also used the first name "William," is believed to be living in China, according to federal prosecutors.

During his time with the company, Yao allegedly downloaded thousands of computer files and other documents that contained various company trade secrets and intellectual property, including data related to the system that operates the unnamed manufacturer's locomotives, according to the indictment.

While Yao was taking his former employer's intellectual property, he was negotiating for a new job with a firm in China that provided automotive telematics service systems, the Justice Department alleges. Yao was born in China, but he's a naturalized U.S. citizen, according to the FBI.

Theft of trade secrets is a federal crime that carries a possible 10-year prison sentence for each count, according to the Justice Department. It's unclear whether Yao will ever return to the U.S. to face the charges. Chinese law does not allow extradition of its citizens.

Deception From the Start

The locomotive firm in suburban Chicago hired Yao in August 2014 as a software engineer, prosecutors say. Yao's alleged theft of company secrets and data started almost immediately, the indictment says.

After two weeks on the job, Yao downloaded more than 3,000 electronic files from the company, including information about the systems that ran the company's locomotives, prosecutors allege.

Over the next six months, Yao allegedly continued to secretly download documents and intellectual property from the company, including more technical details as well as source code, according to the indictment. At the time he was taking these files, Yao was also negotiating for a new job at the Chinese firm, authorities allege.

In February 2015, Yao was fired from his job at the Illinois locomotive company, according to prosecutors. At the time, his former employer was not aware that Yao allegedly had downloaded and stolen thousands of documents and files, authorities say.

In July 2015, Yao made copies of the files and documents and traveled to China to start his new job there, according to the indictment. In November, he made one final trip back to Chicago, traveling through O'Hare International Airport with "nine copies of the Chicago company's control system source code and the systems specifications that explained how the code worked," the indictment alleges. Yao then traveled back to China and has remained there since, prosecutors say.

In December 2017, a federal grand jury in Chicago indicted Yao on the nine charges of theft of trade secrets. That indictment remained sealed until this week.

Malicious Insider

Verizon's 2019 Data Breach Investigations Report found that nearly 20 percent of cybersecurity incidents and 15 percent of the data breaches in 2018 involved employees working within a company. And while that covers both careless and malicious activity, these types of insider threats are a growing concern for companies of all sizes, says Terence Jackson, the CISO of Washington-based security firm Thycotic Software.

The Verizon report notes that malicious insider behavior has increased at least 50 percent since 2015.
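Bulk downloads of the kind the indictment describes can, in principle, be surfaced from file-access logs. The following is a minimal sketch of that idea, not a description of any vendor's product: the `(user, day, file_path)` event format, the function name `flag_bulk_downloaders` and the threshold of 1,000 files per day are all assumptions made for illustration, and the sample data is synthetic.

```python
from collections import Counter
from datetime import date


def flag_bulk_downloaders(events, threshold=1000):
    """Return {(user, day): count} for each user-day with more than `threshold` downloads.

    `events` is an iterable of (user, day, file_path) tuples. This log
    format is hypothetical; real file servers and DLP tools differ.
    """
    counts = Counter((user, day) for user, day, _path in events)
    return {key: n for key, n in counts.items() if n > threshold}


if __name__ == "__main__":
    # Synthetic example: one account pulls 3,000 files in a day, another pulls 12.
    day = date(2024, 1, 15)
    events = [("alice", day, f"/repo/file_{i}.c") for i in range(3000)]
    events += [("bob", day, f"/docs/memo_{i}.txt") for i in range(12)]
    for (user, when), n in flag_bulk_downloaders(events).items():
        print(f"{when} {user}: {n} files downloaded")
```

A fixed threshold is the simplest possible rule. In practice it would likely need tuning per role, and it only helps if sensitive files have been identified first.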

"The indictment lists multiple instances where the malicious insider downloaded massive amounts of documents, and it seems that no one was able to detect these actions early on," Jackson says. "Enterprises should be performing data classification to first identify and classify highly sensitive data and intellectual property."

Aggressive Prosecutions

Over the last several months, the Justice Department has announced several pending cases or convictions connected to China and involving intellectual property.

For instance, on Tuesday, a federal judge sentenced a former U.S. State Department employee to more than three years in prison and a $40,000 fine for accepting cash and gifts from Chinese intelligence agents in exchange for information, according to Fox News.

In November 2018, the Justice Department unsealed an indictment charging a Chinese state-owned firm and its Taiwan partner for allegedly stealing trade secrets from U.S. chip maker Micron Technology, according to news reports.

In the wake of digital transformation, there remain some organizations that - for security reasons - resist the temptation to move to the cloud. What are their objections? Zscaler's Bil Harmer addresses these, as well as the critical questions security leaders should ask of cloud service providers.

Harmer, CISO of the Americas for Zscaler, says the cloud is a game-changer in terms of making security leaders shift their focus from thinking about security as an exercise in protecting solely what they have on-premise.

"Security leaders really need to take that mental step back and say 'how do I adapt to the new environment and avoid the idea of I'm going to make the vendor work within my confine,' because that's just a recipe for disaster," Harmer says.

In an interview about cloud security, Harmer discusses:

Why some security leaders still hold out against the cloud; Critical steps to initiate the transition; Essential questions to ask of cloud service providers.

Harmer has been in the IT industry for 30 years. He has been at the forefront of the Internet since 1995, and his work in security began in 1998. He has led security for startups, government and well-established financial institutions. In 2007, he pioneered the use of the SAS70 coupled with ISO to create a trusted security audit methodology used by the SaaS industry until the introduction of the SOC2. He has presented on security and privacy in Canada, Europe and the U.S. at conferences such as RSA, ISSA, GrrCon and the Cloud Security Alliance. His vision and technical abilities have been used on advisory boards for Adallom, Trust Science, ShieldX, Resolve and Integris. He has served as chief security officer for GoodData and as vice president of security and global privacy officer for the Cloud Division of SAP, and he now serves as the Americas CISO for Zscaler.

Fraud Management & Cybercrime , Ransomware

Attackers Demand Bitcoin Ransom After Encrypting Data (asokan_akshaya) • July 11, 2019

A new ransomware strain called eCh0raix is targeting enterprise storage devices sold by QNAP Network by exploiting vulnerabilities in the gear and bypassing weak credentials using brute-force techniques, according to the security firm Anomali.

The ransomware is targeting QNAP's line of enterprise-grade network-attached storage devices, which are used for file storage and backup, because these devices typically do not run anti-virus software, Anomali says in a blog post.

The file-locking malware first surfaced in late June, when victims reported ransom demands in a BleepingComputer forum thread. Forum posts identified the affected storage devices as the QNAP TS-251, QNAP TS-451, QNAP TS-459 Pro II and the QNAP TS-253B.

Some of the infected systems were not fully patched, and other victims reported seeing failed login attempts, according to posts on the BleepingComputer forum as well as the Anomali blog.

QNAP is a Taiwan-based storage company that focuses on network-attached storage, file sharing, virtualization and surveillance applications. In the U.S., there are believed to be 19,000 publicly facing QNAP devices that could be susceptible to this particular strain of ransomware, the Anomali researchers note.

QNAP could not be immediately reached for comment.

This is the second time this year that malware has been discovered on QNAP's NAS devices. In February, the company issued a security alert stating that an unknown strain of malware was disabling software updates within its devices, leaving them vulnerable to further attacks.

Targeted Attacks

The new eCh0raix ransomware was written and compiled using the Go programming language, and its source code is composed of a minuscule 400 lines, according to the Anomali research.

The ransomware has been designed to carry out targeted attacks by encrypting files with targeted extensions on a network-attached storage device using AES encryption and by appending the ".encrypt" extension to them, Anomali reports.
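Because the malware reportedly appends ".encrypt" to the files it locks, a renamed file is one simple indicator an administrator could look for on a mounted share. The sketch below walks a directory tree and lists files with that suffix. It is an illustrative check only: the function name `find_locked_files` is an assumption, the demo uses a temporary directory rather than a real NAS path, and legitimate files could also happen to end in ".encrypt".

```python
import tempfile
from pathlib import Path


def find_locked_files(root, suffix=".encrypt"):
    """Return a sorted list of files under `root` whose names end with `suffix`."""
    return sorted(
        p for p in Path(root).rglob("*")
        if p.is_file() and p.name.endswith(suffix)
    )


if __name__ == "__main__":
    # Demo on a throwaway directory; point `root` at a mounted share in practice.
    with tempfile.TemporaryDirectory() as root:
        share = Path(root) / "backups"
        share.mkdir()
        (share / "report.docx.encrypt").write_text("ciphertext placeholder")
        (share / "notes.txt").write_text("still readable")
        for path in find_locked_files(root):
            print("possibly locked:", path.name)
```

A scan like this only detects damage after the fact. It does not replace the preventive steps the researchers recommend.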

The eCh0raix ransomware has a low detection rate in anti-virus products, the researchers note.

"It is not common for these devices to run anti-virus products, and currently the samples are only detected by two to three products on VirusTotal, which allows the ransomware to run uninhibited," the researchers write in their blog.

In addition, the analysis of the hard-coded encryption keys of the malware samples revealed that the same decryptor would not work for all victims, the blog notes.

Those who were attacked were notified that their data was locked and were directed to make a ransom payment in bitcoin and not to meddle or tamper with the code.

Region of Origin?

In addition, the researchers examined the command-and-control server associated with the ransomware and noted that the malware checks the location of the infected NAS devices for IP addresses in Belarus, Ukraine or Russia and will then exit without further incident if a match is found.

"This technique is common amongst threat actors, particularly when they do not wish to infect users in their home country," according to the Anomali blog.

To protect against these types of attacks, the researchers recommend that organizations restrict external access to QNAP storage devices, ensure the devices are updated with security patches and use strong credentials.
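Since victims reported failed login attempts, repeated authentication failures from a single source are one sign of brute-force activity worth watching for. The sketch below flags source addresses with several failures inside a short sliding window. The record format `(timestamp, source_ip, succeeded)`, the function name `find_bruteforce_sources` and the default limits (five failures in 10 minutes) are assumptions for illustration and do not reflect QNAP's actual log format.

```python
from collections import defaultdict, deque
from datetime import datetime, timedelta


def find_bruteforce_sources(attempts, max_failures=5, window=timedelta(minutes=10)):
    """Return the set of source IPs with at least `max_failures` failed logins
    inside any time span of length `window`.

    `attempts` is an iterable of (timestamp, source_ip, succeeded) tuples,
    sorted by timestamp. This record format is hypothetical.
    """
    recent = defaultdict(deque)  # source_ip -> timestamps of recent failures
    flagged = set()
    for ts, ip, succeeded in attempts:
        if succeeded:
            continue
        failures = recent[ip]
        failures.append(ts)
        # Drop failures that have fallen out of the sliding window.
        while ts - failures[0] > window:
            failures.popleft()
        if len(failures) >= max_failures:
            flagged.add(ip)
    return flagged


if __name__ == "__main__":
    start = datetime(2024, 1, 1, 0, 0)
    # Synthetic data using documentation-only IP ranges.
    attempts = [(start + timedelta(seconds=30 * i), "203.0.113.7", False) for i in range(6)]
    attempts += [(start + timedelta(hours=i), "198.51.100.2", False) for i in range(6)]
    attempts.sort()
    print(find_bruteforce_sources(attempts))
```

Counting only failures keeps the rule simple. A real deployment would probably also lock out or rate-limit the flagged addresses.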

Ransomware Increasing

Ransomware attacks are on the rise, with attackers increasingly targeting government agencies that seem ill-prepared to cope (see: More US Cities Battered by Ransomware).

In several recent cases, including one ransomware attack that hit Lake City, Florida, it appears that these municipal governments did not have adequate backup to help recover once critical files were locked. This resulted in some communities opting to pay a ransom to get the decryption key (see: Second Florida City Pays Up Following Ransomware Attack).

The success of security operations centers will depend on how well they blend key technologies, including detection, user behavior analytics and orchestration, says Haiyan Song, senior vice president and general manager of security market at Splunk.

Some 90 percent of the tier 1 work that security teams do could be automated, Song says in an interview with Information Security Media Group. If so, teams could then spend more than 50 percent of their time on tasks that require human reasoning and intuition. But that requires administrators to bring data together in a way that's clear and actionable.

"If you want to automate, you need one place," she says.

In this interview, Song also discusses:

How organizations are automating their security responses; Where organizations need to improve to close the gap between detection of an adversary and taking action; How artificial intelligence applied to security will depend on better feedback loops.

Song is senior vice president and general manager of security market for Splunk. She previously was vice president and general manager of HP ArcSight, where she also served as vice president of engineering.

Artificial Intelligence & Machine Learning , Governance , Insider Threat

The Cost of 'Smart Home AI Assistants': Humans Review Audio of What People Say (euroinfosec) • July 12, 2019

Google Home speaker (Photo: Google)

George Orwell's "1984" posited a world in which Big Brother monitored us constantly, in part via our "telescreens," which could not only broadcast but also record. Increasingly, however, it turns out that we're installing surveillance tools ourselves and thus inadvertently exposing not just our hands-free search requests, but also professional secrets and more intimate moments.

See Also: Webinar | The Future of Adaptive Authentication in Financial Services

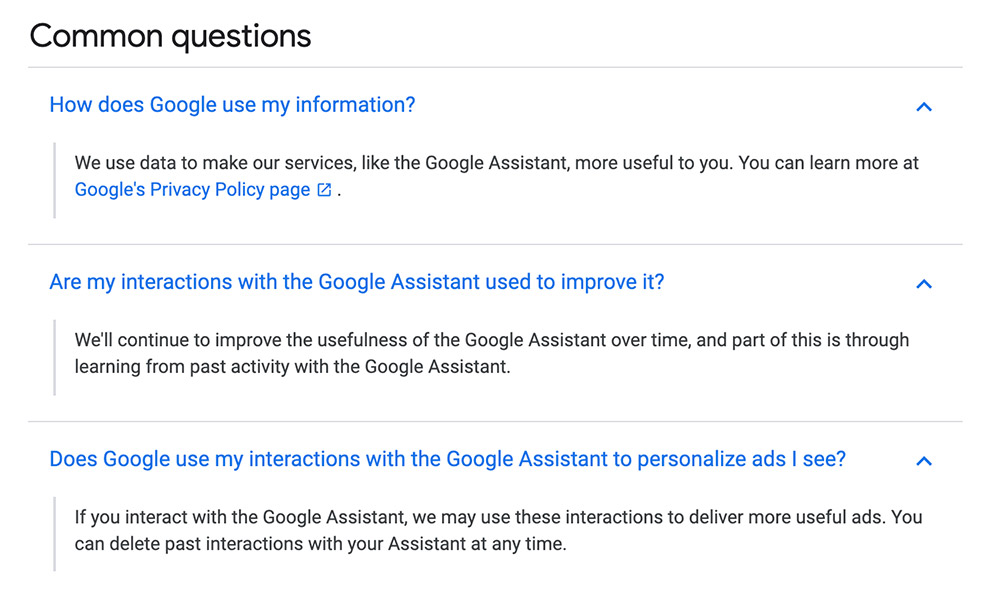

On Thursday, Google confirmed that its language reviewers regularly compare computer-generated scripts for what its devices hear with the actual audio recordings to refine its language-recognition systems.

But along the way, its reviewers also hear lots of things that Google's Home smart speakers and Google Assistant smartphone app record by accident, Belgian media outlet VRT NWS first reported on Wednesday.

The report was based in part on an interview with a Google contractor who shared Dutch audio for review, which included audio that hadn't been captured by users saying their hot word - "OK Google" or "Hey Google" - or having intentionally pressed a button on their speaker or smartphone.

As VRT NWS reports: "This means that a lot of conversations are recorded unintentionally: bedroom conversations, conversations between parents and their children, but also blazing rows and professional phone calls containing lots of private information."

It notes that Google relies on thousands of employees to review audio excerpts, including about a dozen Dutch and Flemish language experts.

This isn't the first time such problems have been identified. In April, Bloomberg reported that language-analysis experts regularly reviewed a subset of commands given to Amazon's Alexa via its Echo devices.

Behind the Curtain

Responding to the VRT NWS report, Google says that building technology that can work well with the world's many different languages, accents and dialects is challenging, and notes that it devotes significant resources to refining this capability.

"This enables products like the Google Assistant to understand your request, whether you're speaking English or Hindi," David Monsees, Google's product manager for search, says in a Thursday blog post.

Google says it reviews about 0.2 percent of all audio snippets that it captures. The company declined to quantify how many audio snippets that represents on an annualized basis.

"As part of our work to develop speech technology for more languages, we partner with language experts around the world who understand the nuances and accents of a specific language," Monsees says. "These language experts review and transcribe a small set of queries to help us better understand those languages. This is a critical part of the process of building speech technology, and is necessary to creating products like the Google Assistant."

Monsees also suggests that inadvertent recordings are rare. "A clear indicator (such as the flashing dots on top of a Google Home or an on-screen indicator on your Android device) will activate any time the device is communicating with Google in order to fulfill your request," he says. "Rarely, devices that have the Google Assistant built in may experience what we call a 'false accept.' This means that there was some noise or words in the background that our software interpreted to be the hot word (like 'OK Google')."

But the VRT NWS report suggests that these visual indicators often go unnoticed, and that the threshold for triggering a hot word may be too low. Of the 1,000 excerpts reviewed by VRT NWS, it said 153 "were conversations that should never have been recorded and during which the command 'OK Google' was clearly not given."

The Self-Surveillance Age

The rise of self-surveillance is well documented (see: Fitness Dystopia in the Age of Self-Surveillance).

Today, our cellular phones ping telecommunications infrastructure on a constant basis, revealing our location to telcos and to anyone else with whom they share the data or to whom they sell it.

We upload photographs to Facebook and Instagram that reveal our location and interactions with friends. Social networks are increasingly using facial-recognition algorithms and machine learning to identify those friends by name, while also watching the establishments we visit to provide more targeted advertising.

And we're never more than one "Hey Siri" or "OK Google" away from an immediate, hands-free answer to a question.

Who Watches the Listeners?

Many technology firms maintain that they're just providers handling anonymized data and - barring a court order in the occasional murder case - not sharing the data or recordings constantly captured by their devices with anyone else.

But it turns out that where Google's smart speakers and smartphone app are concerned, there's at least one potential weak link in the security assurance process: People who review the audio snippets to better refine the performance of these "AI home assistants."

Google's "choose what to share with your Google Assistant" help page

Of course, audio captured - inadvertently or otherwise - by "smart" home devices could potentially reveal details about individuals' actual identity. And so if humans are a necessary part of the process, then one question Google Assistant users should be asking is: How does Google vet these reviewers?

I put that question to Google, along with queries about how its audio reviewers are meant to proceed if they hear evidence of a crime, such as spouse abuse or child endangerment, and how such policies are enforced.

A spokesman declined to respond to that specific question, but says a review of its practices is underway. "We partner with language experts around the world to improve speech technology by transcribing a small set of queries - this work is critical to developing technology that powers products like the Google Assistant," he tells me. "Language experts only review around 0.2 percent of all audio snippets, and these snippets are not associated with user accounts as part of the review process. We just learned that one of these reviewers has violated our data security policies by leaking confidential Dutch audio data. Our security and privacy response teams have been activated on this issue, are investigating, and we will take action. We are conducting a full review of our safeguards in this space to prevent misconduct like this from happening again."

Risk Management for AI Home Assistants

For information security experts, there's a further takeaway from this story: You need to add the likes of Google Assistant and Amazon Alexa to your threat model.

U.K.-based Kevin Beaumont, who works as an information security manager, says he uses Alexa precisely because his employees use these types of devices too. "I secure organizations for a living; it's easier if I live the same world as the people I'm working with to secure said things," Beaumont tweets.

Beaumont says becoming better versed in the IoT world is akin to making the jump from Linux to Windows. "For my first job in '99 I was a pure Linux kid; I didn't use Windows," he says. "I quickly realized I had no concept of risk management for business as I didn't know what they were doing."

Welcome to our brave new IoT-enabled, social-media-documented, perpetually connected world.